In a recent project, I was looking into various approaches on how to provide a Github Actions pipeline access to an internal RDS database for integration testing of a web application. One of the approaches I tried was from this AWS Article on using SSM with IAM Authentication for RDS.

IAM database authentication relies on using IAM roles and policies to control access to the database. We don’t need to create or manage database passwords. For Postgresql, this involves attaching the rds_iam role to the database user. RDS generates an authentication token which is valid for 15 minutes.

The setup include the following resources:

- a VPC with private, public and database subnets

- an RDS Postgresql database, provisioned into the database subnet

- an EC2 that serves as a jumphost, with SSM agent installed and the AmazonSSMManagedInstanceCore policy added to the instance profile role. The instance is provisioned into the private subnet

- an IAM policy attached to the DB login role or user with rds-db:connect action added as a policy

The resources are created and managed using Terraform.

The RDS is a aurora serverless postgresql cluster with a single instance:

resource "aws_rds_cluster" "postgresql" {

cluster_identifier = "aurora-test"

engine = "aurora-postgresql"

engine_version = "17.5"

availability_zones = module.vpc.azs

db_subnet_group_name = module.vpc.database_subnet_group_name

database_name = var.db_name

master_username = var.db_username

# set below to true to allow DB secrets to be stored in secrets manager

manage_master_user_password = true

skip_final_snapshot = true

storage_encrypted = true

vpc_security_group_ids = [aws_security_group.allow_rds.id]

iam_database_authentication_enabled = true

serverlessv2_scaling_configuration {

max_capacity = 16.0

min_capacity = 0

seconds_until_auto_pause = 300

}

}

resource "aws_rds_cluster_instance" "cluster_instances" {

cluster_identifier = aws_rds_cluster.postgresql.id

instance_class = "db.serverless"

engine = aws_rds_cluster.postgresql.engine

engine_version = aws_rds_cluster.postgresql.engine_version

db_subnet_group_name = module.vpc.database_subnet_group_name

ca_cert_identifier = "rds-ca-rsa4096-g1"

}Note the use of the serverlessv2_scaling_configuration which scales the dataabase capacity down to 0 when there are no active connections. We need to set iam_database_authentication_enabled to true to turn on IAM database authentication

Next, we create a custom IAM policy that grants the rds-db:connect action to the database user to login with. We attach this custom policy to the target role or user:

data "aws_iam_policy_document" "rds_db_iam" {

statement {

effect = "Allow"

actions = [

"rds-db:connect"

]

resources = [

"arn:aws:rds-db:eu-west-2:${data.aws_caller_identity.current.account_id}:dbuser:${aws_rds_cluster.postgresql.id}/${var.db_iam_user}"

]

}

}Note that the var.db_iam_user variable refers to the database user to login as. This is separate from the master username we create the database with originally.

After both the RDS cluster and EC2 jumphost are provisioned, we need to SSM into the jumphost and create the database login user using the following commands:

sudo yum update -y

sudo dnf install postgresql17 -y

PGPASSWORD="XXXX" psql --host=$RDS_HOSTNAME --port=5432 --dbname=flaskapp_test --username=flaskapp_test << SQL

CREATE USER ${var.db_iam_user};

GRANT rds_iam to ${var.db_iam_user};

GRANT ALL ON SCHEMA public TO ${var.db_iam_user};

SQLNote that we need to apply the rds_iam postgresql permission set to the database user. We also grant the database user permission to read and write to the database via the grant on public schema.

To test the login on localhost, we need to have both the AWS cli installed with the session manager plugin as per the guide on Session Manager plugin for AWS cli:

curl "https://s3.amazonaws.com/session-manager-downloads/plugin/latest/ubuntu_64bit/session-manager-plugin.deb" -o "session-manager-plugin.deb"

sudo dpkg -i session-manager-plugin.deb

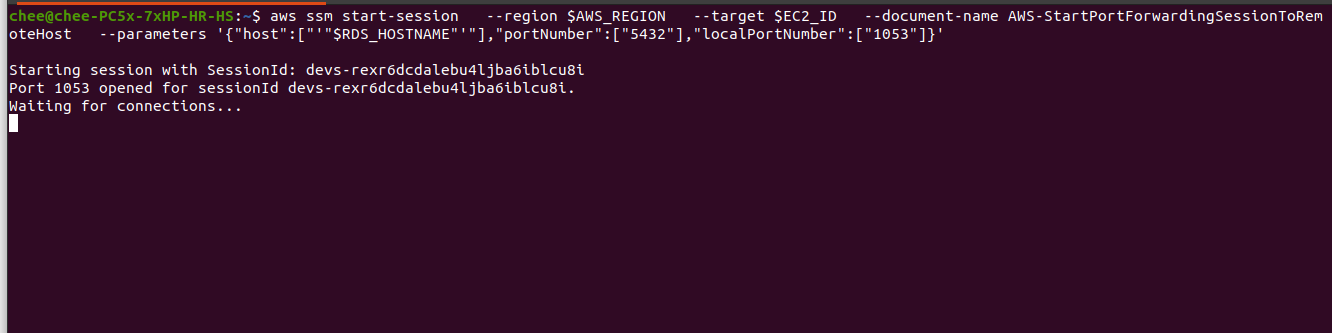

session-manager-pluginThe session manager plugin uses remote port forwarding to connect to a remote host running SSM and from the remote host, connects to the RDS Postgresql instance. In our example, we are starting a local SSM session which targets the EC2 Jumphost created earlier and using the SSM document AWS-StartPortForwardingSessionToRemoteHost which takes as parameters the remote hostname; the remote port number; and the local port number. We set the remote host to be the target endpoint of the RDS cluster; port of 5432; and a local port of 1053.

aws ssm start-session --region $AWS_REGION \

--target $EC2_ID \

--document-name AWS-StartPortForwardingSessionToRemoteHost \

--parameters '{"host":["'"$RDS_HOSTNAME"'"],"portNumber":["5432"],"localPortNumber":["1053"]}'

What this means is that any traffic running on port 1053 on localhost will be forwarded to the SSM session. Note that as of this writing, I am unable to run this command in a script as it would result in no connections being received.

Next, in a separate terminal window, we make a call to the generate-auth-token action using the AWS CLI to obtain an authentication token for database login. Note that this token is only valid for 15 minutes:

export PGPASSWORD="$(aws rds generate-db-auth-token --hostname $RDS_HOSTNAME --port $MASTER_DB_PORT --region $AWS_REGION --username $RDS_USERNAME)"We set the RDS_USERNAME to be the database login user i.e. the username used in GRANT rds_iam.

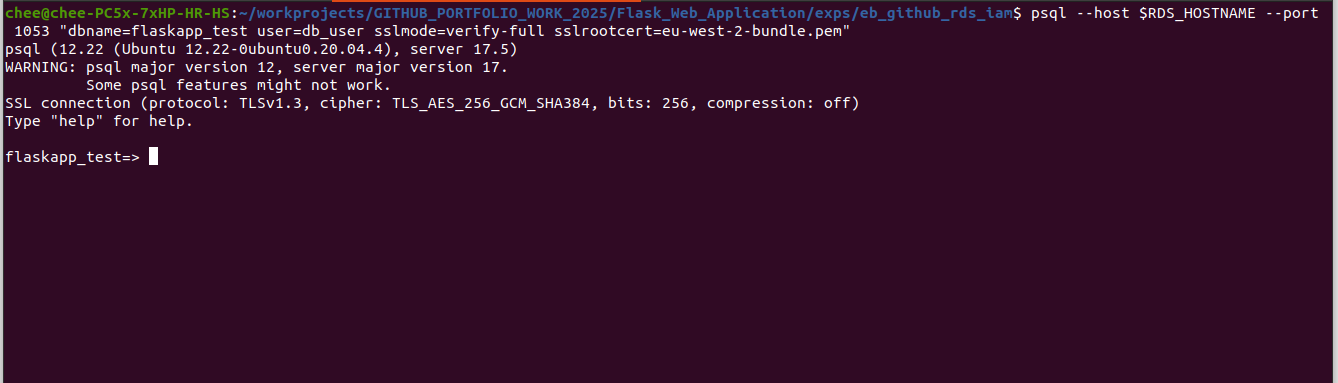

Note that since a ca_cert_identitifer has been applied to the instance, we need to pass in the root certificate to connect to the database. The CA certs can be downloaded from RDS CA certificates:

echo "127.0.0.1 $RDS_HOSTNAME" >> /etc/hosts

psql --host $RDS_HOSTNAME \

--port 1053 \

"dbname=flaskapp_test user=db_user sslmode=verify-full sslrootcert=eu-west-2-bundle.pem"Since we have enabled TLS on the RDS instance by setting the CA cert identitier, we need the hostname parameter to psql to match the hostname value in the certificate. We add a mapping entry to the /etc/hosts which maps localhost to the RDS hostname.

If successful, we should see the psql console as below:

While the above approach is more secure than setting database credentials, it suffers from the following drawbacks for my own use case:

-

The database authentication token needs to be regenerated once it has expired. This means we are unable to obtain a persistent connection for running applications or tests.

-

There is some network latency between running the SSM port forwarding session locally and forwarding the traffic to the remote RDS instance.

The main advantage of this approach is the use of IAM roles and policies for authentication and authorization which lends itself to auditing of permissions. It also simplifies the process of adding new users or roles to allow access to the database.

In my opinion, the approach described here is better suited for running one-off tasks on remote databases such as running ad-hoc queries or some form of data extraction process.

Further articles will present different approaches to connect to internal RDS databases from external sources.